This document is relevant for: Inf1, Trn3

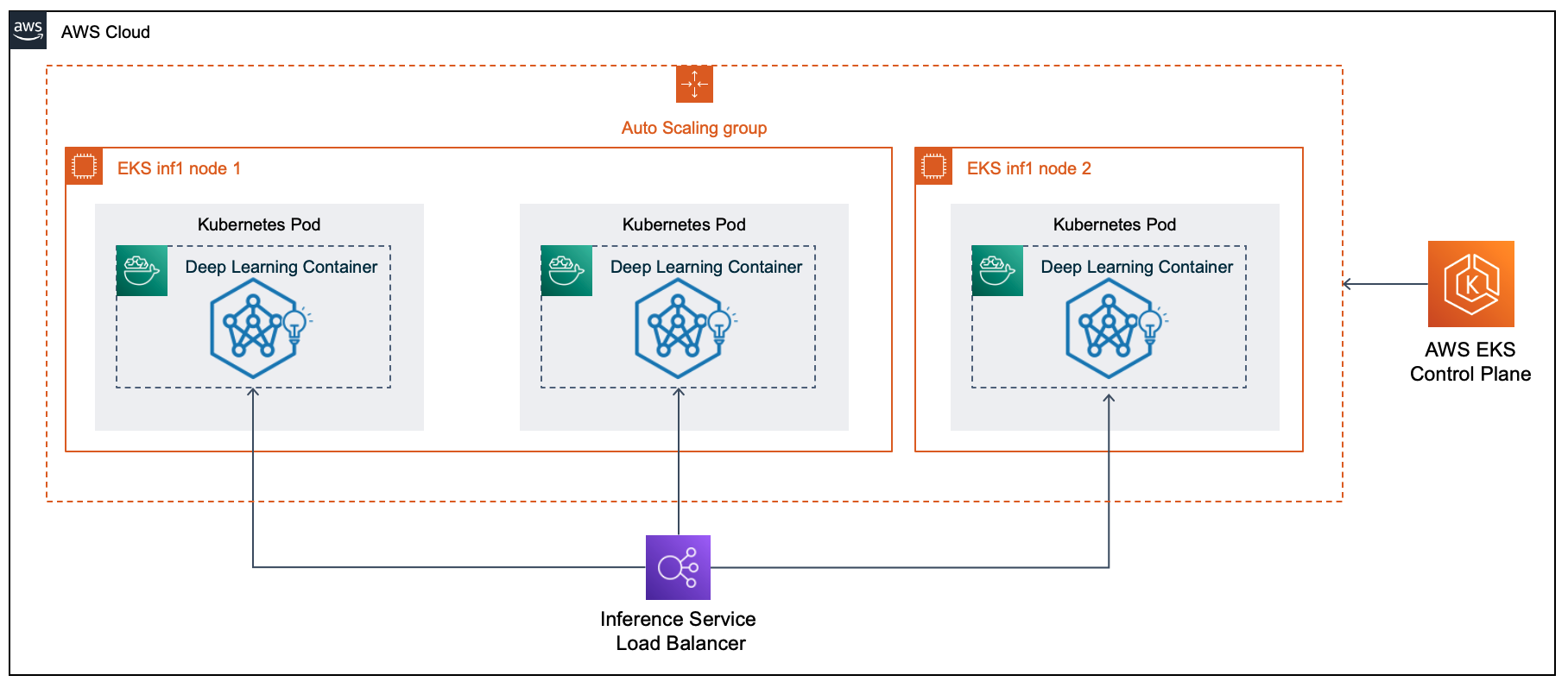

Deploy Neuron Container on Elastic Kubernetes Service (EKS) for Inference

Description

You can use the Neuron version of the AWS Deep Learning Containers to run inference on Amazon Elastic Kubernetes Service (EKS). In this developer flow, you set up an EKS cluster with Inf1 instances, create a Kubernetes manifest for your inference service, and deploy it to your cluster. This developer flow assumes:

The model has already been compiled, either through Compilation with Framework API on an EC2 instance or through Compilation with SageMaker Neo.

You have already set up your container to retrieve the compiled model from storage.

Setup Environment

Add Inferentia nodes to your cluster by following the instructions at EKS Setup for Neuron.

Using the YAML deployment manifest shown in the EKS documentation for Inferentia, replace the image in the containers specification with the one you built using Tutorial How to Build and Run a Neuron Container.
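As a minimal sketch of that substitution, the Python snippet below swaps the `image` field of one container in a Deployment manifest held as a plain dictionary. The manifest shown is a trimmed, hypothetical stand-in for the one in the EKS documentation: the name `neuron-inference`, the placeholder image strings, and the helper `set_container_image` are all assumptions made for illustration, not part of any Neuron or EKS tooling.

```python
import copy
import json


def set_container_image(manifest, container_name, image):
    """Return a copy of a Deployment manifest with one container's image replaced."""
    updated = copy.deepcopy(manifest)  # leave the caller's manifest untouched
    containers = updated["spec"]["template"]["spec"]["containers"]
    for container in containers:
        if container["name"] == container_name:
            container["image"] = image
            return updated
    raise KeyError(f"no container named {container_name!r} in manifest")


# Hypothetical, trimmed-down Deployment; the real manifest has more fields.
manifest = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "neuron-inference"},
    "spec": {
        "template": {
            "spec": {
                "containers": [
                    {
                        "name": "neuron-inference",
                        "image": "<image from the EKS documentation>",
                    }
                ]
            }
        }
    },
}

updated = set_container_image(
    manifest, "neuron-inference", "<your Neuron container image>"
)
print(json.dumps(updated, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied directly. Editing the YAML file by hand is equally valid for a one-off deployment.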

Note

Before deploying the YAML manifest to your EKS cluster, make sure to push the image to Amazon ECR. Refer to Pushing a Docker image for more information.
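The image reference in the manifest has to match the URI under which the image was pushed. The sketch below shows how a private ECR image URI is typically composed in the standard commercial AWS regions. The helper `ecr_image_uri` and the account ID, region, and repository name are hypothetical values chosen for illustration; substitute your own.

```python
def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Compose the URI that addresses an image in a private Amazon ECR repository."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


# Hypothetical account ID, region, and repository name.
uri = ecr_image_uri("123456789012", "us-west-2", "neuron-inference")
print(uri)  # 123456789012.dkr.ecr.us-west-2.amazonaws.com/neuron-inference:latest
```

The resulting string is what goes into the `image` field of the containers specification.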

Inference Example

Refer to Deploy a TensorFlow Resnet50 model as a Kubernetes service to run a simple inference example. Note that the container image referenced in the YAML manifest is created using Tutorial How to Build and Run a Neuron Container.
