Where are the older NKI API reference pages?

If you landed on this page, you probably followed a link to an older NKI API reference page. The NKI API reference documentation has been updated to match the NKI APIs that shipped with Beta 2 of the Neuron Kernel Interface in December 2025.

To view older versions of the NKI API docs, follow these steps:

Go to the AWS Neuron NKI API docs landing page: https://awsdocs-neuron.readthedocs-hosted.com/en/latest/nki/api/index.html

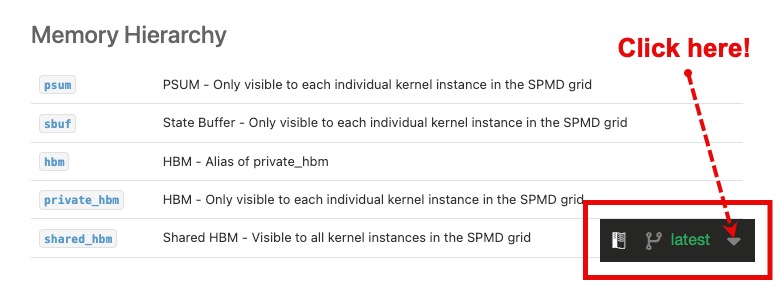

Click on the version selector dropdown at the bottom-right of the page (it will say latest by default).

Select the version that corresponds to the older NKI API docs you want to view. The last published version of the NKI API docs before the Beta 2 release is version 2.26.1.
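Read the Docs generally serves each documentation version under a `/<language>/<version>/` URL prefix. Choosing a version in the selector mostly amounts to swapping that version segment. The sketch below does the same swap programmatically. It is a convenience illustration, not part of the Neuron tooling: the helper name `versioned_rtd_url` is hypothetical, and the version slug `v2.26.1` is an assumption. A given page may also live at a different path in an older version, so the generated URL can return a 404. The version selector remains the reliable route.

```python
from urllib.parse import urlsplit, urlunsplit


def versioned_rtd_url(url: str, version: str) -> str:
    """Return `url` with its Read the Docs version segment replaced.

    Hypothetical helper. Assumes the usual Read the Docs layout
    /<language>/<version>/<page path>.
    """
    parts = urlsplit(url)
    segments = parts.path.split("/")
    # segments[0] is "" because the path starts with "/";
    # segments[1] is the language code and segments[2] is the version slug.
    if len(segments) < 3 or not segments[1] or not segments[2]:
        raise ValueError(f"not a /<language>/<version>/... path: {parts.path!r}")
    segments[2] = version
    # SplitResult is a namedtuple, so _replace swaps in the new path.
    return urlunsplit(parts._replace(path="/".join(segments)))


latest = "https://awsdocs-neuron.readthedocs-hosted.com/en/latest/nki/api/index.html"
# "v2.26.1" is an assumed slug; confirm the real one in the version selector.
print(versioned_rtd_url(latest, "v2.26.1"))
```

If the slug is correct and the page exists at the same path, the printed URL opens the 2.26.1 copy of the page. Otherwise, start from the 2.26.1 landing page and navigate from there.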

Legacy NKI API reference pages

AWS Neuron SDK version: 2.26.1 (last version before NKI Beta 2)

| NKI API namespace | Link |
|---|---|
| | |
| | |
| | |
| | |
| NKI API common fields | |
| NKI API error documentation | |