This document is relevant for: Inf1, Trn3

The Neuron scheduler extension is required for scheduling pods that require more than one Neuron core or device resource. For a graphical depiction of how the Neuron scheduler extension works, see Neuron Scheduler Extension Flow Diagram. The Neuron scheduler extension finds sets of directly connected devices with minimal communication latency when scheduling containers.

On Inf1 and Inf2 instance types, where Neuron devices are connected through a ring topology, the scheduler finds sets of contiguous devices. For example, for a container requesting 3 Neuron devices, the scheduler might assign devices 0, 1, and 2 if they are available, but never devices 0, 2, and 4, because those devices are not directly connected.
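The contiguity rule can be sketched in Python. This is a minimal illustration, not the extension's code: `is_contiguous_on_ring` is a hypothetical helper. It assumes that device IDs follow ring order and that the last device is adjacent to device 0 (wrap-around). The text above does not state that second point explicitly.

```python
def is_contiguous_on_ring(devices, num_devices):
    """Return True if `devices` forms one unbroken arc of a ring of `num_devices`.

    Assumption (illustration only): device IDs follow ring order and device
    num_devices - 1 is adjacent to device 0.
    """
    devices = list(devices)
    ids = set(devices)
    # Reject empty requests, duplicate IDs, and IDs outside the ring.
    if not ids or len(ids) != len(devices):
        return False
    if any(d < 0 or d >= num_devices for d in ids):
        return False
    k = len(ids)
    # Try every starting position; the set is contiguous if it equals
    # the k consecutive ring positions beginning there.
    return any(
        ids == {(start + i) % num_devices for i in range(k)}
        for start in range(num_devices)
    )


# An inf2.48xlarge node has 12 Neuron devices.
print(is_contiguous_on_ring([0, 1, 2], 12))
print(is_contiguous_on_ring([0, 2, 4], 12))
```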

On Trn1.32xlarge and Trn1n.32xlarge instance types, where devices are connected through a 2D torus topology, the Neuron scheduler enforces an additional constraint: containers must request 1, 4, 8, or all 16 devices. If your container requires a different number of devices, such as 2 or 5, we recommend that you use an Inf2 instance instead of Trn1, because Inf2 does not restrict the number of contiguous devices a container can request.

Container Device Allocation On Different Instance Types

The Neuron scheduler extension applies different rules when finding devices to allocate to a container on Inf1 and Inf2 instances than on Trn1. These rules ensure that when users request a specific number of resources, Neuron delivers consistent and high performance regardless of which cores and devices are assigned to the container.

On Inf1 and Inf2, Neuron devices are connected through a ring topology. There is no restriction on the number of devices requested, as long as it does not exceed the number of devices on the node. When a user requests N devices, the scheduler finds a node where N contiguous devices are available; it never allocates non-contiguous devices to the same container. The figures below show examples of device sets on an Inf2.48xlarge node that could be assigned to a container requesting 2 devices.

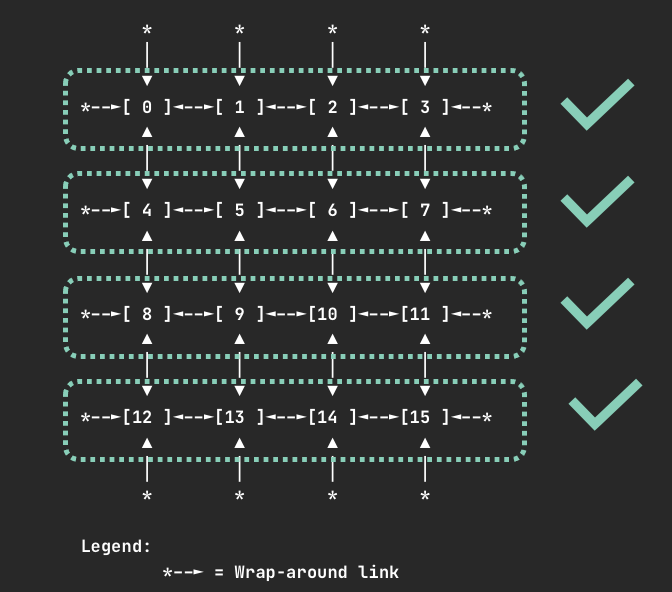

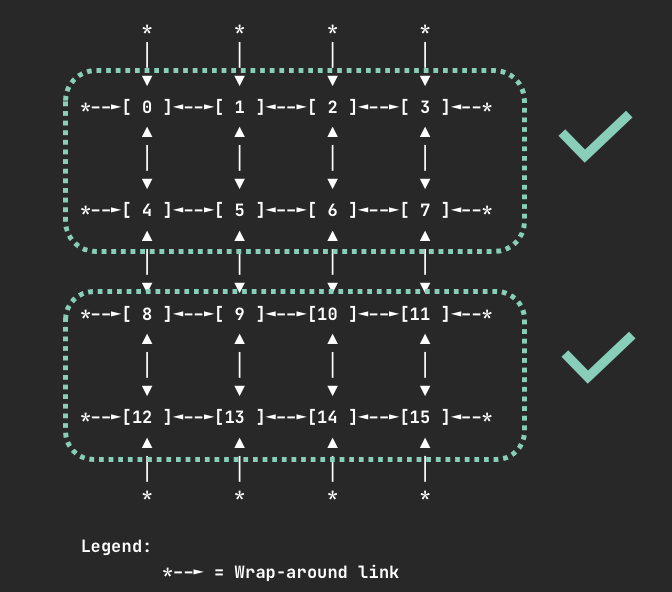
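To make the allocation rule concrete, the following sketch searches a per-device usage map for N contiguous free devices. The usage map is a list of booleans, similar to the `neuronDevUsageMap` printed in the scheduler logs later in this document. `find_contiguous_free` is hypothetical. It returns the first free window it finds, whereas this document does not describe how the real extension chooses among several valid windows. It also assumes ring wrap-around, meaning the last device is adjacent to device 0.

```python
def find_contiguous_free(usage, count):
    """Return device IDs of the first `count` contiguous free devices, or None.

    `usage[i]` is True when device i is already allocated. Device IDs are
    assumed to follow ring order, with the last device adjacent to device 0.
    """
    n = len(usage)
    if count < 1 or count > n:
        return None  # a request larger than the node can never be satisfied
    for start in range(n):
        window = [(start + i) % n for i in range(count)]
        if not any(usage[d] for d in window):
            return window
    return None  # no run of free devices is long enough


# 12-device node (for example inf2.48xlarge) with devices 0, 1, and 3 in use.
usage = [True, True, False, True] + [False] * 8
print(find_contiguous_free(usage, 2))
```

In this example device 2 is free, but both of its neighbors are busy, so a 2-device request skips it and lands on devices 4 and 5.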

Devices on Trn1.32xlarge and Trn1n.32xlarge nodes are connected through a 2D torus topology. On Trn1 nodes, containers can request 1, 4, 8, or all 16 devices. If you request an invalid number of devices, such as 7, your pod will not be scheduled and you will receive the following warning:

Instance type trn1.32xlarge does not support requests for device: 7. Please request a different number of devices.

When requesting 4 devices, your container will be allocated one of the following sets of devices if they are available.

When requesting 8 devices, your container will be allocated one of the following sets of devices if they are available.

For all instance types, requesting one or all Neuron cores or devices is valid.
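The Trn1 request rule can be expressed as a simple membership check. In this sketch, `check_trn1_device_request` is a hypothetical helper. It reproduces the warning text quoted above for any count other than 1, 4, 8, or 16. It is not the extension's actual validation code.

```python
# Device counts accepted on trn1.32xlarge / trn1n.32xlarge nodes,
# per the rule above: 1, 4, 8, or all 16 devices.
TRN1_VALID_DEVICE_COUNTS = frozenset({1, 4, 8, 16})


def check_trn1_device_request(instance_type, count):
    """Return None if `count` is allowed, otherwise the warning text."""
    if count in TRN1_VALID_DEVICE_COUNTS:
        return None
    return (
        f"Instance type {instance_type} does not support requests for "
        f"device: {count}. Please request a different number of devices."
    )


for n in (1, 4, 7, 16):
    print(n, check_trn1_device_request("trn1.32xlarge", n))
```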

Deploy the Neuron Scheduler Extension

In cluster environments where there is no access to the default scheduler, the Neuron scheduler extension can be used with another scheduler. A new scheduler is deployed alongside the default scheduler, the Neuron scheduler extension is registered with this new scheduler, and the pods that run Neuron workloads are configured to use it. EKS does not yet natively support the Neuron scheduler extension, so in EKS environments this is the only way to add it.

Make sure the Neuron device plugin is running.

Install the neuron-scheduler-extension

helm upgrade --install neuron-helm-chart oci://public.ecr.aws/neuron/neuron-helm-chart \
    --set "scheduler.enabled=true" \
    --set "npd.enabled=false"

Check that there are no errors in the my-scheduler pod logs and that the k8s-neuron-scheduler pod is bound to a node. The pod name suffixes shown here will differ in your cluster.

kubectl logs -n kube-system my-scheduler-79bd4cb788-hq2sq

I1012 15:30:21.629611 1 scheduler.go:604] "Successfully bound pod to node" pod="kube-system/k8s-neuron-scheduler-5d9d9d7988-xcpqm" node="ip-192-168-2-25.ec2.internal" evaluatedNodes=1 feasibleNodes=1
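If you want to script this check, a small parser can extract the pod and node from the log line shown above. `find_binding` is a hypothetical helper. Its regular expression assumes the exact `"Successfully bound pod to node" pod="..." node="..."` layout of that line.

```python
import re

# Matches the structured kube-scheduler message shown above.
BOUND_RE = re.compile(
    r'"Successfully bound pod to node" pod="(?P<pod>[^"]+)" node="(?P<node>[^"]+)"'
)


def find_binding(log_text, pod_prefix):
    """Return (pod, node) for the first bound pod whose name starts with pod_prefix."""
    for line in log_text.splitlines():
        match = BOUND_RE.search(line)
        if match and match.group("pod").startswith(pod_prefix):
            return match.group("pod"), match.group("node")
    return None  # no matching binding found


sample = (
    'I1012 15:30:21.629611 1 scheduler.go:604] "Successfully bound pod to node" '
    'pod="kube-system/k8s-neuron-scheduler-5d9d9d7988-xcpqm" '
    'node="ip-192-168-2-25.ec2.internal" evaluatedNodes=1 feasibleNodes=1'
)
print(find_binding(sample, "kube-system/k8s-neuron-scheduler"))
```

In practice you would pass the text captured from `kubectl logs` instead of the hard-coded sample.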

When running new pods that need the Neuron scheduler extension, make sure they use my-scheduler as their scheduler by setting schedulerName. A sample pod spec follows:

apiVersion: v1
kind: Pod
metadata:
  name: <POD_NAME>
spec:
  restartPolicy: Never
  schedulerName: my-scheduler
  containers:
    - name: <POD_NAME>
      command: ["<COMMAND>"]
      image: <IMAGE_NAME>
      resources:
        limits:
          cpu: "4"
          memory: 4Gi
          aws.amazon.com/neuroncore: 9
        requests:
          cpu: "1"
          memory: 1Gi
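If you generate pod specs programmatically, the fields that matter here are `schedulerName` and the `aws.amazon.com/neuroncore` limit. The sketch below builds the same manifest as a Python dictionary and prints it as JSON, a format that `kubectl apply -f -` also accepts. `build_neuron_pod` and its parameters are hypothetical names. Replace the placeholder values with your own before applying the manifest.

```python
import json


def build_neuron_pod(name, image, command, neuron_cores, scheduler="my-scheduler"):
    """Build a Pod manifest (as a dict) that is scheduled by the custom scheduler."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            # Route the pod to the scheduler that has the Neuron extension.
            "schedulerName": scheduler,
            "containers": [
                {
                    "name": name,
                    "command": command,
                    "image": image,
                    "resources": {
                        "limits": {
                            "cpu": "4",
                            "memory": "4Gi",
                            # Number of NeuronCores the extension must place.
                            "aws.amazon.com/neuroncore": neuron_cores,
                        },
                        "requests": {"cpu": "1", "memory": "1Gi"},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    pod = build_neuron_pod("<POD_NAME>", "<IMAGE_NAME>", ["<COMMAND>"], 9)
    print(json.dumps(pod, indent=2))
```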

After the Neuron workload pod starts, make sure the k8s-neuron-scheduler logs show a successful filter and bind request:

kubectl logs -n kube-system k8s-neuron-scheduler-5d9d9d7988-xcpqm

2022/10/12 15:41:16 POD nrt-test-5038 fits in Node:ip-192-168-2-25.ec2.internal
2022/10/12 15:41:16 Filtered nodes: [ip-192-168-2-25.ec2.internal]
2022/10/12 15:41:16 Failed nodes: map[]
2022/10/12 15:41:16 Finished Processing Filter Request...

2022/10/12 15:41:16 Executing Bind Request!
2022/10/12 15:41:16 Determine if the pod %v is NeuronDevice podnrt-test-5038
2022/10/12 15:41:16 Updating POD Annotation with alloc devices!
2022/10/12 15:41:16 Return aws.amazon.com/neuroncore
2022/10/12 15:41:16 neuronDevUsageMap for resource:aws.amazon.com/neuroncore in node: ip-192-168-2-25.ec2.internal is [false false false false false false false false false false false false false false false false]
2022/10/12 15:41:16 Allocated ids for POD nrt-test-5038 are: 0,1,2,3,4,5,6,7,8
2022/10/12 15:41:16 Try to bind pod nrt-test-5038 in default namespace to node ip-192-168-2-25.ec2.internal with &Binding{ObjectMeta:{nrt-test-5038 8da590b1-30bc-4335-b7e7-fe574f4f5538 0 0001-01-01 00:00:00 +0000 UTC <nil> <nil> map[] map[] [] [] []},Target:ObjectReference{Kind:Node,Namespace:,Name:ip-192-168-2-25.ec2.internal,UID:,APIVersion:,ResourceVersion:,FieldPath:,},}
2022/10/12 15:41:16 Updating the DevUsageMap since the bind is successful!
2022/10/12 15:41:16 Return aws.amazon.com/neuroncore
2022/10/12 15:41:16 neuronDevUsageMap for resource:aws.amazon.com/neuroncore in node: ip-192-168-2-25.ec2.internal is [false false false false false false false false false false false false false false false false]
2022/10/12 15:41:16 neuronDevUsageMap for resource:aws.amazon.com/neurondevice in node: ip-192-168-2-25.ec2.internal is [false false false false]
2022/10/12 15:41:16 Allocated devices list 0,1,2,3,4,5,6,7,8 for resource aws.amazon.com/neuroncore
2022/10/12 15:41:16 Allocated devices list [0] for other resource aws.amazon.com/neurondevice
2022/10/12 15:41:16 Allocated devices list [0] for other resource aws.amazon.com/neurondevice
2022/10/12 15:41:16 Allocated devices list [0] for other resource aws.amazon.com/neurondevice
2022/10/12 15:41:16 Allocated devices list [0] for other resource aws.amazon.com/neurondevice
2022/10/12 15:41:16 Allocated devices list [1] for other resource aws.amazon.com/neurondevice
2022/10/12 15:41:16 Allocated devices list [1] for other resource aws.amazon.com/neurondevice
2022/10/12 15:41:16 Allocated devices list [1] for other resource aws.amazon.com/neurondevice
2022/10/12 15:41:16 Allocated devices list [1] for other resource aws.amazon.com/neurondevice
2022/10/12 15:41:16 Allocated devices list [2] for other resource aws.amazon.com/neurondevice
2022/10/12 15:41:16 Return aws.amazon.com/neuroncore
2022/10/12 15:41:16 Succesfully updated the DevUsageMap [true true true true true true true true true false false false false false false false] and otherDevUsageMap [true true true false] after alloc for node ip-192-168-2-25.ec2.internal
2022/10/12 15:41:16 Finished executing Bind Request...
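A little arithmetic confirms the log lines above. The node in this example reports 16 NeuronCores and 4 Neuron devices, which matches Inf1's 4 NeuronCores per device. Assuming cores are numbered consecutively within each device, core c belongs to device c // 4. That mapping reproduces both the per-core `Allocated devices list` lines and the final usage maps. The helper names below are hypothetical.

```python
def device_of_core(core_id, cores_per_device):
    """Map a NeuronCore index to its Neuron device index (assumed layout)."""
    return core_id // cores_per_device


def usage_maps(core_ids, total_cores, cores_per_device):
    """Build core- and device-level usage maps like those in the scheduler log."""
    allocated = set(core_ids)
    core_map = [c in allocated for c in range(total_cores)]
    devices = {device_of_core(c, cores_per_device) for c in allocated}
    device_map = [d in devices for d in range(total_cores // cores_per_device)]
    return core_map, device_map


# "Allocated ids for POD nrt-test-5038 are: 0,1,2,3,4,5,6,7,8"
cores = range(9)
print([device_of_core(c, 4) for c in cores])
core_map, device_map = usage_maps(cores, total_cores=16, cores_per_device=4)
print(device_map)
```

Because 9 is not a multiple of 4, the pod spans three devices. The core usage map in the log shows cores 9, 10, and 11 on device 2 as still unallocated.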

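Alternatively, if you can configure the default kube-scheduler (for example, in a cluster you create with kops), you can register the Neuron scheduler extension with the default scheduler instead of deploying a second scheduler. First, make sure the Neuron device plugin is running.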

Enable the kube-scheduler option to use a ConfigMap for the scheduler policy. In your cluster.yml, update the spec section with the following:

spec:
  kubeScheduler:
    usePolicyConfigMap: true

Launch the cluster

kops create -f cluster.yml
kops create secret --name neuron-test-1.k8s.local sshpublickey admin -i ~/.ssh/id_rsa.pub
kops update cluster --name neuron-test-1.k8s.local --yes

Install the neuron-scheduler-extension. This step registers the extension with kube-scheduler:

helm upgrade --install neuron-helm-chart oci://public.ecr.aws/neuron/neuron-helm-chart \
    --set "scheduler.enabled=true" \
    --set "scheduler.customScheduler.enabled=false" \
    --set "scheduler.defaultScheduler.enabled=true" \
    --set "npd.enabled=false"
