This document is relevant for: Inf1, Trn3

Bring Your Own Neuron Container to SageMaker Hosting (inf1)

Description

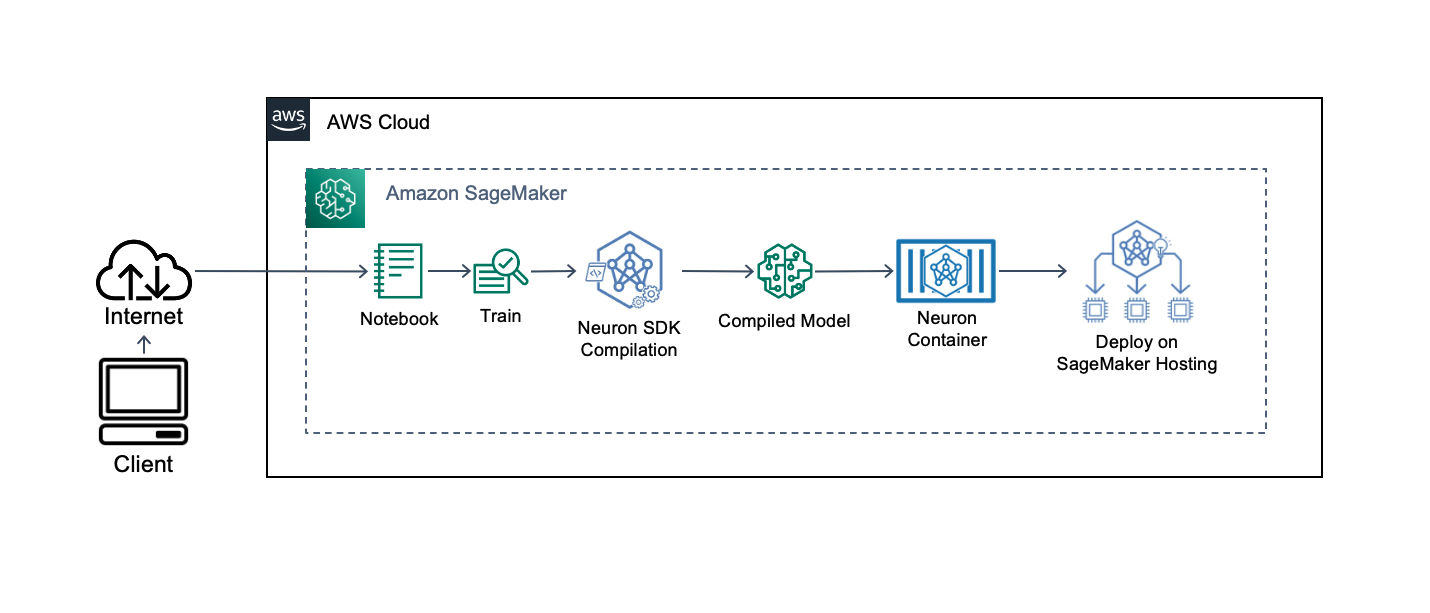

You can use a SageMaker Notebook or an EC2 instance to compile models and build your own containers for deployment on SageMaker Hosting using ml.inf1 instances. In this developer flow, you provision a SageMaker Notebook or an EC2 instance to train your model and compile it for Inferentia. Then you deploy your model to SageMaker Hosting using the SageMaker Python SDK. Follow the steps below to set up your environment. Once your environment is set up, you'll be able to follow the BYOC HuggingFace pretrained BERT container to SageMaker Tutorial.

Setup Environment

- Create a Compilation Instance:

If you use an EC2 instance for compilation, you can use an Inf1 instance to compile and test a model. Follow these steps to launch an Inf1 instance:

Follow the instructions at launch an Amazon EC2 Instance to launch an Inf1 instance. When choosing the instance type in the EC2 console, make sure to select the correct instance type. For more information about Inf1 instance sizes and pricing, see the Inf1 web page.

Select the Amazon Machine Image (AMI) of your choice. Neuron supports the Ubuntu 18 and Amazon Linux 2 AMIs; you can also choose an Ubuntu 18 or Amazon Linux 2 Deep Learning AMI (DLAMI).

After launching the instance, follow the instructions in Connect to your instance to connect to the instance.
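As an alternative to the console, the launch step can be scripted. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured; the helper names, the `us-west-2` default region, and the `inf1.xlarge` default size are illustrative assumptions, and the AMI ID and key pair name must be supplied by you.

```python
def inf1_launch_params(ami_id, key_name, instance_type="inf1.xlarge"):
    """Build keyword arguments for the EC2 RunInstances call (hypothetical helper)."""
    if not instance_type.startswith("inf1."):
        raise ValueError(f"expected an Inf1 instance type, got {instance_type!r}")
    return {
        "ImageId": ami_id,          # Ubuntu 18 / Amazon Linux 2 AMI or DLAMI of your choice
        "InstanceType": instance_type,
        "KeyName": key_name,        # existing EC2 key pair used to connect later
        "MinCount": 1,
        "MaxCount": 1,
    }


def launch_inf1_instance(ami_id, key_name, region="us-west-2"):
    """Launch one Inf1 instance and return its instance ID.

    Requires boto3 and valid AWS credentials; the region is an assumption.
    """
    import boto3  # imported lazily so the parameter helper works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**inf1_launch_params(ami_id, key_name))
    return response["Instances"][0]["InstanceId"]
```

Keeping the parameter construction separate from the API call makes it possible to review the request before anything is launched.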

If you use a SageMaker Notebook for compilation, follow the instructions in Get Started with Notebook Instances to provision the environment.

It is recommended that you start with an ml.c5.4xlarge instance for the compilation. Also, increase the volume size of your SageMaker notebook instance to accommodate the models and containers built locally. A volume of 10GB is sufficient.
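The recommended notebook configuration can also be expressed programmatically. This is a sketch only, assuming boto3 and an existing SageMaker execution role; the function names and the notebook name you pass in are hypothetical, while the instance type and volume size mirror the recommendation above.

```python
def notebook_instance_params(name, role_arn,
                             instance_type="ml.c5.4xlarge", volume_gb=10):
    """Build keyword arguments for SageMaker CreateNotebookInstance (hypothetical helper)."""
    return {
        "NotebookInstanceName": name,
        "InstanceType": instance_type,   # recommended starting size for compilation
        "RoleArn": role_arn,             # execution role the notebook will assume
        "VolumeSizeInGB": volume_gb,     # room for models and locally built containers
    }


def create_compilation_notebook(name, role_arn):
    """Create the notebook instance; requires boto3 and AWS credentials."""
    import boto3  # lazy import keeps the helper above dependency-free

    sm = boto3.client("sagemaker")
    return sm.create_notebook_instance(**notebook_instance_params(name, role_arn))
```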

Note

To compile the model in the SageMaker Notebook instance, you'll need to update the conda environments to include the Neuron Compiler and Neuron Framework Extensions. Follow the section how-to-update-to-latest-Neuron-Conda-Env of the installation guide to update the environments.

- Set up the environment to compile a model, build your own container and deploy:

To compile your model on EC2 or a SageMaker Notebook, follow the Set up a development environment section in the EC2 Setup Environment documentation.

Refer to the Adapting Your Own Inference Container documentation for information on how to bring your own containers to SageMaker Hosting.
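Once the development environment is in place, compilation and packaging might look like the sketch below. It assumes a PyTorch model and the `torch-neuron` package (the source does not prescribe a framework), and the function names and default file names are hypothetical. The packaging step reflects the fact that SageMaker Hosting expects model artifacts as a gzipped tar archive.

```python
import os
import tarfile


def compile_to_neuron(model, example_inputs, output_path="model_neuron.pt"):
    """Trace a PyTorch model for Inferentia and save it.

    Assumes torch and torch-neuron are installed in the active environment.
    """
    import torch
    import torch_neuron  # noqa: F401  (importing registers torch.neuron)

    model.eval()
    model_neuron = torch.neuron.trace(model, example_inputs)
    model_neuron.save(output_path)
    return output_path


def package_model(model_path, archive_path="model.tar.gz"):
    """Bundle the compiled model into the tar.gz layout SageMaker Hosting expects."""
    with tarfile.open(archive_path, "w:gz") as tar:
        # Store the file at the archive root, without its local directory path.
        tar.add(model_path, arcname=os.path.basename(model_path))
    return archive_path
```

The resulting archive would then be uploaded to Amazon S3 so that the hosting endpoint can load it.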

Make sure to attach the AmazonEC2ContainerRegistryPowerUser managed policy to the IAM role used by your SageMaker Notebook instance, so you're able to build and push containers from it.
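The permission can be granted from code as well as from the IAM console. The sketch below assumes boto3 and credentials that are allowed to modify the role; the helper names are hypothetical. The IAM API takes a role name rather than an ARN, so a small helper extracts the name from the ARN.

```python
ECR_POWER_USER_POLICY_ARN = (
    "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryPowerUser"
)


def role_name_from_arn(role_arn):
    """Return the role name (last path segment) from an IAM role ARN."""
    resource = role_arn.split(":", 5)[5]   # e.g. "role/service-role/MyRole"
    if not resource.startswith("role/"):
        raise ValueError(f"not an IAM role ARN: {role_arn!r}")
    return resource.rsplit("/", 1)[-1]


def grant_ecr_power_user(role_arn):
    """Attach the ECR power-user policy to the role; requires boto3 and credentials."""
    import boto3  # lazy import keeps the helpers above dependency-free

    iam = boto3.client("iam")
    iam.attach_role_policy(
        RoleName=role_name_from_arn(role_arn),
        PolicyArn=ECR_POWER_USER_POLICY_ARN,
    )
```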

Note

You can create the container image by following the tutorial How to Build and Run a Neuron Container.
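With the image pushed to Amazon ECR and the compiled model uploaded to Amazon S3, deployment through the SageMaker Python SDK can be sketched as follows. This assumes the `sagemaker` package is installed; the function names, the `ml.inf1.xlarge` default, and all argument values are illustrative assumptions rather than values taken from this guide.

```python
def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Compose the ECR URI of a pushed container image."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def deploy_neuron_model(image_uri, model_data, role_arn, endpoint_name,
                        instance_type="ml.inf1.xlarge"):
    """Create a SageMaker model from a custom container and deploy it.

    Requires the sagemaker SDK and AWS credentials. model_data is the S3 URI
    of the model.tar.gz archive.
    """
    from sagemaker.model import Model  # lazy import; only needed when deploying

    model = Model(image_uri=image_uri, model_data=model_data, role=role_arn)
    model.deploy(
        initial_instance_count=1,
        instance_type=instance_type,
        endpoint_name=endpoint_name,
    )
    return endpoint_name
```

Deployment creates a billable endpoint, so delete it when it is no longer needed.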
